nlp_intro

4. History of language modelling

Explanations, formulas, visualisations:

- Lena Voita’s blog: Language Modelling

- Eisenstein 6 (ignore the details of NN architectures for now)

- Jurafsky-Martin 3

- Goldberg / Norvig example notebook

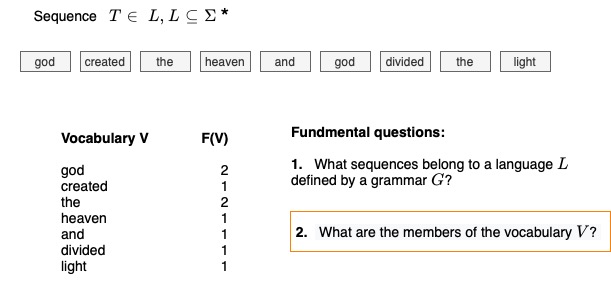

Language as a stochastic process

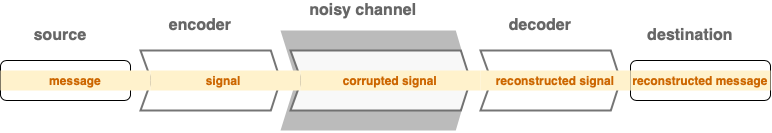

A way of modelling language computationally is to view it as a code sequence, that is a sequence of symbols generated by an encoder in the sense of information theory. By observing the sequences and looking for regularities in it, we model the encoder.

Initially, this approach to language modelling concerned only the form of language, that is the rules of grammar. A famous example showing the difference between the form and the content is the following sentence by Noam Chomsky:

Colorless green ideas sleep furiously.

We judge this sentence as correctly formed, although it does not mean anything. If we would strip off the formal units, the sentence would sound like this:

Color green idea sleep furious.

Or we could give all the words the same form:

Colorless greenless idealess sleepless furiousless.

Or we could shuffle the order:

Sleep colorless furiously ideas green.

Sleep color furious idea green.

Sleepless colorless furiousless idealess greenless.

Only the first sentence is grammatical, all the other versions are not. When we say we model the form of language, we make predictions on what combinations of words (or subword units) are grammatical regardless of whether these sequences have a meaning.

Statistical or n-gram models

We model the form of sequences by calculating the probability of a sequence.

In statistical modelling, we decompose sequences into n-grams. The probability of the sequence is a product of the n-gram probabilities, which are estimated on a large corpus using n-gram counts (maximum likelihood estimation).

We typically do not model the subword structure in statistical models, word-level tokens are taken as discrete, atomic units. What n-gram modelling shows is the probability of joint occurrence of several words in a given order. The order of words is thus the main aspect of grammaticality targetted by statistical models.

Modelling the probability of a sequence in this way was useful for speech-to-text conversion, machine translation and grammar checking.

Estimating n-gram probabilities is harder than it sounds - the longer the n-gram, the harder the estimation. Real statistical models employ various techniques to overcome the problem of estimation:

- Smoothing: re-distributing the probability over unobserved items

- Back-off: combining the probabilities of different n-gram orders

- Out-of-vocabulary words (OOV): we can never have a finite word-level vocabulary.

The main limitation is that the decomposition of texts into n-grams is too simplistic: not possible to capture long-distance dependences.

Early neural language models

Neural networks started being used for estimating the probability of sequences in early 2000s. At that time, the task was seen exactly as before: scoring the grammaticality of a sequence of words. The first models were recurrent networks (RNNs) trained on the task of predicting the next word.

The most important difference between statistical and (early) neural models:

- Full history: because the hidden recurrent states contain the information on the history of a sequence, there is no need to decompose into n-grams

- Representation (embedding): to predict the next word, neural networks need to represent the words in a continuous feature space

The last point was crucial for generalising the use of language models beyond grammaticality - the representations are the content. So, in order to predict the next word, we actually model the meaning of all words, not just the form of the sequence.

An important notion for further developments was self-supervision: to train a neural language model we do not need human annotation. Words naturally occurring in text are the only information we need. The model tries to predict a word and then it can easily check whether it succeeded or not.

Important: Self-supervision doesn’t mean that neural language models are unsupervised, neural networks cannot learn without supervision.

Masked language modelling (MLM)

The next step towards modern large models was abandoning the idea of predicting the next word. Instead, we look into both left and right context and predict a target word. This is what brought to the language modelling objective masked language modelling (MLM), which was very popular until recently. Note that taking into account both directions is only possible in encoding. When we use language models to generate text (decoding), we can only look into the left context.

The word2vec set of algorithms can be seen as one of the early implementations of the MLM idea, introduced in 2013. It is also the first implementation of modelling the meaning of words by means of a language model. An important difference compared to the contemporary models is that it models individual words: each word gets a vector representation, which is, in some sense, the sum (or the average) of all uses of that word in the training corpus (all contexts are collapsed into a single representation).

The next step was modelling subword units (GloVe).

BERT-like models were the main technology behind transfer-learning for a few years after they were introduced in 2019. They model subword sequences resulting in contextual, or dynamic embeddings: each subword gets a different representation for each distinct context in which it appears.

Large language models (LLMs)

Although BERT-like models can be considered large, what is currently understood under the term Large Language Models (LLMs) are decoder-type models, also known as Generative Pretrained Transformers (GPT). These models are similar to early neural models in that they predict the next word (not a masked target), given the previous sequence.

The main difference compared to the early neural models:

- The network architectureL: Transformers instead of RNNs

- More feedback taken into account via transfer learning.

Statistical vs. neural

- Statistical still used in practice for ASR: fast and well understood

- Neural models are used for other text generation tasks: machine translation, summarisation, robot-writers, but they are hard to deploy in dynamic use cases.